Synthesizing Scene-Aware Virtual Reality Teleport Graphs

Changyang Li, Haikun Huang, Jyh-Ming Lien, Lap-Fai Yu

George Mason University

Abstract

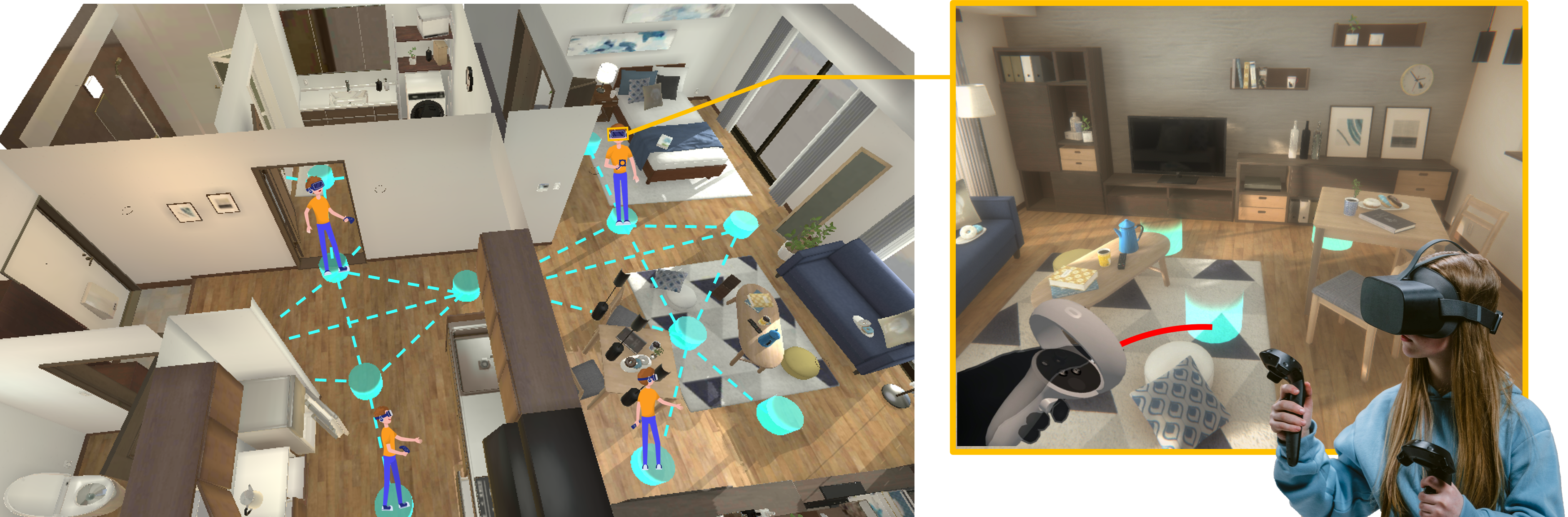

We present a novel approach for synthesizing scene-aware virtual reality teleport graphs, which facilitate navigation in indoor virtual environments by suggesting desirable teleport positions. Our approach analyzes panoramic views at candidate teleport positions by extracting scene perception graphs, which encode scene perception relationships between the observer and the surrounding objects, and predicts how desirable the views at these positions are. We train a graph convolutional model to predict the scene perception scores of different teleport positions. Based on these predictions, we apply an optimization approach to sample a set of desirable teleport positions while considering other navigation properties, such as coverage and connectivity, to synthesize a teleport graph. Using teleport graphs, users can navigate virtual environments effectively. We demonstrate our approach by synthesizing teleport graphs for common indoor scenes. Through a user study, we validate the efficacy and desirability of navigating virtual environments via the synthesized teleport graphs. We also extend our approach to cope with different constraints, user preferences, and practical scenarios.
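The abstract describes the final sampling step only at a high level: choose teleport positions with good predicted view scores while keeping the scene covered and the graph connected. The following is a minimal sketch of that trade-off using a simple greedy heuristic swapped in for illustration. It is not the authors' optimizer. The per-candidate `scores` stand in for the graph convolutional model's scene perception scores, and coverage is approximated by a radius around each position rather than true visibility. The function names and the parameters `view_radius`, `max_hop`, and `coverage_weight` are hypothetical.

```python
import math
from typing import List, Set, Tuple

Point = Tuple[float, float]


def covered(samples: List[Point], center: Point, radius: float) -> Set[int]:
    """Indices of floor samples within `radius` of `center` (a crude visibility proxy)."""
    return {i for i, p in enumerate(samples) if math.dist(p, center) <= radius}


def greedy_teleport_graph(
    candidates: List[Point],
    scores: List[float],
    samples: List[Point],
    k: int,
    view_radius: float,
    max_hop: float,
    coverage_weight: float = 1.0,
) -> Tuple[List[int], List[Tuple[int, int]]]:
    """Greedily pick up to k teleport positions and link those within max_hop.

    scores: hypothetical per-candidate view scores (stand-in for a learned predictor).
    samples: floor points used to measure coverage.
    """
    total = max(len(samples), 1)
    # Seed the graph with the best-scoring candidate.
    selected = [max(range(len(candidates)), key=lambda i: scores[i])]
    seen = covered(samples, candidates[selected[0]], view_radius)
    while len(selected) < k:
        best, best_gain = None, 0.0
        for i, c in enumerate(candidates):
            if i in selected:
                continue
            # Connectivity: only consider positions one hop from the current graph.
            if not any(math.dist(c, candidates[j]) <= max_hop for j in selected):
                continue
            # Trade off view quality against newly covered floor area.
            new_cover = len(covered(samples, c, view_radius) - seen) / total
            gain = scores[i] + coverage_weight * new_cover
            if best is None or gain > best_gain:
                best, best_gain = i, gain
        if best is None:
            break  # every remaining candidate is unreachable
        selected.append(best)
        seen |= covered(samples, candidates[best], view_radius)
    # Teleport edges: pairs of selected positions within one hop of each other.
    edges = [
        (a, b)
        for x, a in enumerate(selected)
        for b in selected[x + 1:]
        if math.dist(candidates[a], candidates[b]) <= max_hop
    ]
    return selected, edges
```

Setting `coverage_weight` to 0 reduces the sketch to "best reachable score first"; raising it favors positions that reveal unseen parts of the room. A greedy pass is used here only because it is short and deterministic; it can commit early to a poor choice that a global optimizer would avoid.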

Keywords

Teleportation, Virtual Reality, Navigation, Graph Convolutional Networks.

Publication

Changyang Li, Haikun Huang, Jyh-Ming Lien, Lap-Fai Yu. Synthesizing Scene-Aware Virtual Reality Teleport Graphs. ACM Transactions on Graphics (Proceedings of SIGGRAPH Asia 2021).

Paper, Supplementary Material

BibTeX

@article{teleport,
  title = {Synthesizing Scene-Aware Virtual Reality Teleport Graphs},
  author = {Changyang Li and Haikun Huang and Jyh-Ming Lien and Lap-Fai Yu},
  journal = {ACM Transactions on Graphics (TOG)},
  volume = {40},
  number = {6},
  pages = {1--15},
  year = {2021},
  publisher = {ACM New York, NY, USA}
}

Acknowledgement

We are grateful to the anonymous reviewers for their constructive comments. We thank Amilcar Gomez Samayoa and Javier Talavera for their help with the user study. This project was supported by an NSF CAREER Award (award number: 1942531).